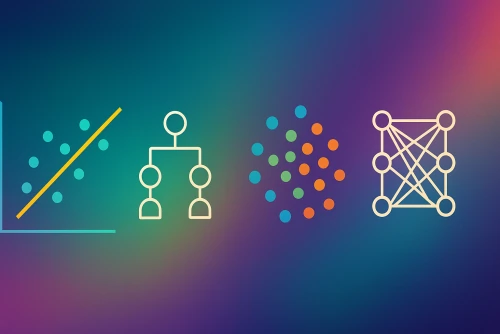

Machine Learning is powered by algorithms – sets of rules or instructions that tell a computer how to learn from data. There's a vast zoo of ML algorithms out there, each suited for different types of problems and data. For beginners, it can seem overwhelming!

This post provides a quick, high-level overview of some of the most common and fundamental ML algorithms. We won't dive deep into the math here, but rather focus on what they do and where they are typically used. For a broader understanding of where these algorithms fit, you might want to read our "Introduction to Data Science" or "What is Machine Learning?" guides.

1. Linear Regression

- Type: Supervised Learning (Regression)

- What it does: Tries to find a linear relationship between input features and a continuous output variable. Imagine drawing a straight line that best fits a scatter plot of data points.

- Analogy: Predicting a student's exam score based on the number of hours they studied. As study hours increase, the score tends to increase linearly (up to a point!).

- Use Cases:
  - Predicting house prices based on features like size and location.
  - Forecasting sales based on advertising spend.
  - Estimating the impact of temperature on crop yield.

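- Simple & Interpretable: It's one of the simplest ML algorithms, and its results are easy to understand. Each coefficient tells you how much the prediction changes when that feature increases by one unit, holding the other features fixed. You can learn how to evaluate its performance with our guide on Regression Metrics.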
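
As a minimal sketch of the idea, the snippet below fits a straight line to a single input feature using the closed-form ordinary least squares formulas (slope = covariance / variance). It uses plain Python so it runs without extra libraries. The function name `fit_simple_linear_regression` is hypothetical, and the study-hours data is made up for illustration. In practice you would typically use a library such as scikit-learn's `LinearRegression`, which also handles multiple features.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    # The least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied vs. exam score
hours = [1, 2, 3, 4, 5]
scores = [55, 62, 68, 75, 80]

slope, intercept = fit_simple_linear_regression(hours, scores)
print(f"score = {intercept:.1f} + {slope:.1f} * hours")
print(f"predicted score for 6 hours: {intercept + slope * 6:.1f}")
```

On this made-up data, the fitted line is score = 49.1 + 6.3 × hours. The interpretability mentioned above comes from this form: each extra hour of study adds about 6.3 points to the prediction.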

2. Logistic Regression

- Type: Supervised Learning (Classification)

- What it does: Despite its name, Logistic Regression is used for classification tasks rather than for predicting continuous values. It predicts the probability that an input belongs to a particular class (usually one of two classes, like Yes/No or 0/1). A threshold (commonly 0.5) then turns that probability into a class label.

- Analogy: Predicting whether a student will pass or fail an exam based on study hours. It outputs a probability (e.g., 0.8 probability of passing).

- Use Cases:
  - Spam email detection (spam or not spam).
  - Predicting if a customer will click on an ad (click or not click).
  - Medical diagnosis (e.g., predicting if a tumor is malignant or benign based on certain features).

- Foundation for Neural Networks: The logistic function (or sigmoid function) used in Logistic Regression is also a common activation function in neural networks.
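
To make the pass/fail analogy concrete, here is a minimal sketch that fits a one-feature logistic regression by batch gradient descent on the log loss, then applies a 0.5 threshold. The learning rate, epoch count, function names, and data are all illustrative assumptions, not tuned or taken from a library. Real projects would usually use something like scikit-learn's `LogisticRegression`.

```python
import math


def sigmoid(z):
    """The logistic (sigmoid) function squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, lr=0.1, epochs=5000):
    """Fit P(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            # For log loss, the gradient w.r.t. (w*x + b) simplifies to (prediction - label)
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data: hours studied and whether the student passed (1) or failed (0)
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic_regression(hours, passed)
for h in (1.0, 4.5):
    p = sigmoid(w * h + b)
    label = "pass" if p >= 0.5 else "fail"
    print(f"{h} hours -> P(pass) = {p:.2f} -> predict {label}")
```

The model outputs a probability rather than a hard label. The threshold step is a separate decision, and it can be moved (for example, lowered in a medical screening setting) without retraining.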

3. Decision Trees

- Type: Supervised Learning (can be used for both Classification and Regression)

- What it does: Builds a tree-like model of decisions by repeatedly splitting the dataset into smaller subsets, adding one decision to the tree at each split. Each internal node represents a "test" on an attribute (e.g., is humidity > 70%?), each branch represents the outcome of the test, and each leaf node represents a class label (in classification) or a continuous value (in regression).

- Analogy: A game of "20 Questions," where you ask a series of yes/no questions to arrive at an answer.

- Use Cases:
  - Customer churn prediction (will a customer leave or stay?).
  - Identifying factors that lead to a loan default.
  - Diagnosing medical conditions based on symptoms.

- Easy to Visualize & Understand: The tree structure is intuitive. However, single decision trees can be prone to overfitting, especially when they are grown deep enough to memorize the training data.

- Basis for Powerful Ensembles: Decision Trees are the building blocks for more powerful algorithms like Random Forests and Gradient Boosted Trees.
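
The core step of growing a tree is choosing the best "test" for a node. The sketch below uses Gini impurity (one common criterion; entropy is another) to pick the best `humidity > threshold` split on a single feature. A full tree-building algorithm would repeat this search over every feature and then recurse into each resulting subset until the nodes are pure or a depth limit is reached. The function names and the humidity/played data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 for a pure node, higher when classes are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Try every 'value > threshold' test and return (threshold, weighted impurity)."""
    n = len(labels)
    best_threshold, best_score = None, gini(labels)  # impurity if we don't split
    for t in sorted(set(values))[:-1]:  # a threshold at the max value splits nothing
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        # Weight each child's impurity by the fraction of samples it receives
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score


# Hypothetical data: humidity (%) and whether a game was played
humidity = [60, 65, 70, 75, 80, 85, 90]
played = ["yes", "yes", "yes", "no", "yes", "no", "no"]

threshold, impurity = best_split(humidity, played)
print(f"best test: humidity > {threshold}? (weighted Gini = {impurity:.3f})")
```

Here the best first question is "humidity > 70?". The left branch is pure ("yes"), and the right branch still mixes classes, so a tree would keep splitting it. Unchecked, that repeated splitting is exactly how a single tree ends up overfitting.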

4. Support Vector Machines (SVM)

- Type: Supervised Learning (primarily Classification, but can be adapted for Regression)

- What it does: Finds the hyperplane (a line in 2D, a plane in 3D, or a hyperplane in higher dimensions) that best separates data points belonging to different classes in the feature space. The goal is to maximize the margin (the distance) between the hyperplane and the nearest data points from each class (called support vectors).

- Analogy: Imagine finding the widest possible road that separates two groups of houses (two classes) on a map.

- Use Cases:
  - Image classification.
  - Text categorization.
  - Bioinformatics (e.g., classifying proteins).
  - Handwriting recognition.

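- Effective in High-Dimensional Spaces: Works well even when there are many features. It can also use the "kernel trick" to handle non-linear data, implicitly mapping the points into a higher-dimensional space where a separating hyperplane may exist.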
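
The margin idea can be sketched with a linear SVM trained by stochastic subgradient descent on the regularized hinge loss (a Pegasos-style approach). This training method is swapped in here for simplicity. Libraries such as scikit-learn's `SVC` solve the SVM optimization problem differently and also support kernels. The hyperparameters (`lam`, `lr`, `epochs`), the helper names, and the 2D points are all illustrative assumptions, and labels must be -1 or +1.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Linear SVM via stochastic subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) with labels in {-1, +1}.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            if y * (dot(w, x) + b) < 1:
                # Point is inside the margin or misclassified: move the boundary away from it
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Point is safely outside the margin: only shrink w (which widens the margin)
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def predict(w, b, x):
    return 1 if dot(w, x) + b >= 0 else -1


# Hypothetical 2D data: two separable groups
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -1), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
print("w =", [round(wi, 3) for wi in w], "b =", round(b, 3))
print([predict(w, b, p) for p in points])
```

Only points that fall inside the margin trigger the hinge update. That is why the final boundary is determined by the closest points, the support vectors, rather than by every sample.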

5. K-Means Clustering

- Type: Unsupervised Learning (Clustering)

- What it does: Aims to partition a dataset into 'K' distinct, non-overlapping clusters. It assigns each data point to the cluster whose mean (centroid) is nearest. The algorithm iteratively updates the centroids and reassigns data points until the clusters stabilize.

- Analogy: Sorting a mixed pile of T-shirts into K piles based on color, without knowing the color names beforehand.

- Use Cases:
  - Customer segmentation (grouping customers with similar behaviors).
  - Document clustering (grouping similar documents by topic).
  - Image compression.
  - Anomaly detection (points far from any cluster centroid might be anomalies).

- Simple & Fast: Relatively easy to implement and computationally efficient for large datasets. However, you need to specify the number of clusters (K) beforehand, and it can be sensitive to the initial placement of centroids.
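
The assign-then-update loop described above is Lloyd's algorithm, the standard way K-Means is computed. Below is a minimal plain-Python sketch of it. Centroids are initialized by sampling K data points with a fixed seed, which is one simple choice among several (libraries often default to k-means++). The function name and data are hypothetical.

```python
import math
import random


def kmeans(points, k, max_iters=100, seed=0):
    """Lloyd's algorithm: returns (centroids, labels)."""
    rng = random.Random(seed)
    # Initialize centroids as k distinct data points chosen at random
    centroids = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster with the nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical 2D data with two obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(points, k=2)
print("centroids:", centroids)
print("labels:", labels)
```

Changing `seed` changes the starting centroids. On less cleanly separated data, that can change the final clusters, which is the sensitivity to initial placement mentioned above. A common remedy is running several initializations and keeping the best result.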

6. Artificial Neural Networks (ANNs) & Deep Learning

- Type: Can be Supervised, Unsupervised, or Reinforcement Learning.

- What it does: Inspired by the human brain, ANNs are networks of interconnected nodes (neurons) organized in layers. Each connection has a weight that is adjusted during training. "Deep Learning" refers to ANNs with many hidden layers, allowing them to learn very complex patterns and hierarchical features from data. Understanding the key concepts of features, labels, and models is foundational here.

- Analogy: A simplified model of how neurons in the brain process information and learn.

- Use Cases (especially Deep Learning):
  - Image recognition and computer vision (e.g., self-driving cars).
  - Natural Language Processing (NLP) (e.g., machine translation, chatbots, sentiment analysis).
  - Speech recognition.
  - Recommendation systems.
  - Drug discovery.

- Powerful but Complex: Can achieve state-of-the-art performance on many tasks, especially with large datasets. However, they often require significant computational resources and large amounts of data for training, and can be harder to interpret (the "black box" problem). Making these models more explainable is one of the key trends shaping the future of machine learning.
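
To show what "layers of neurons with weighted connections" means in practice, here is a sketch of a forward pass through a tiny two-layer network that computes XOR. XOR is a classic task that no single straight line can separate, so it needs a hidden layer. The weights below are hand-picked for illustration (a hypothetical setup). A real network would learn them during training, typically with backpropagation and gradient descent, which this sketch does not show.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """A fully connected layer: each neuron computes sigmoid(weighted sum of inputs + bias)."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]


# Hand-picked weights (hypothetical; normally learned during training)
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]
HIDDEN_B = [-10.0, 30.0]
OUTPUT_W = [[20.0, 20.0]]
OUTPUT_B = [-30.0]


def forward(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)         # neuron 1 acts like OR, neuron 2 like NAND
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # output neuron acts like AND of the two


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x), 3))
```

Each hidden neuron learns a simple feature, and the output layer combines them. Deep networks stack many such layers, which lets them build up the hierarchical features described above.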

Many More Algorithms Exist!

This is just a small selection of common ML algorithms. Others include:

- Naive Bayes: A probabilistic classifier based on Bayes' theorem, with the "naive" assumption that features are independent of each other given the class.

- K-Nearest Neighbors (KNN): A simple algorithm that classifies a new data point based on the majority class of its 'k' nearest neighbors.

- Random Forests: An ensemble method that builds multiple decision trees and combines their outputs.

- Gradient Boosting Machines (GBM): Another powerful ensemble method that builds trees sequentially, where each tree corrects the errors of the previous ones.

- Principal Component Analysis (PCA): An unsupervised learning algorithm for dimensionality reduction.
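
K-Nearest Neighbors is simple enough to sketch in a few lines. The example below stores the training data, measures Euclidean distance to a new point, and takes a majority vote among the `k` closest labels. The function name, the default `k=3`, and the data are illustrative assumptions.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort training examples by Euclidean distance to the query
    by_distance = sorted(zip(train_points, train_labels),
                         key=lambda pair: math.dist(pair[0], query))
    nearest_labels = [label for _, label in by_distance[:k]]
    # most_common keeps first-encountered order on ties, so the closer label wins a tie
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical training data with two classes
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_points, train_labels, (1.5, 1.5)))
print(knn_predict(train_points, train_labels, (6.5, 6.0)))
```

There is no real training step: all the work happens at prediction time. That makes KNN easy to understand but slow on large datasets.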

Choosing the right algorithm depends on the nature of your problem (classification, regression, clustering), the size and type of your data, the desired performance, and interpretability requirements. Effective feature engineering and data cleaning also play crucial roles.

As you continue your ML journey, you'll become more familiar with these algorithms and learn when and how to apply them effectively.

Which of these algorithms sounds most interesting to you and why?
